
| author | KN4CK3R | 2023-02-05 11:12:31 +0100 |
|---|---|---|
| committer | GitHub | 2023-02-05 18:12:31 +0800 |
| commit | df789d962b3d568a77773dd53f98eb37c8bd1f6b | |
| tree | 37f3548dac506fa668956d9c2a524bd63ce4e8b3 /services | |
| parent | 7baeb9c52a69eb6f7e0973986f2a6bebdd6352d0 | |

Add Cargo package registry (#21888)

This PR implements a [Cargo registry](https://doc.rust-lang.org/cargo/) to manage Rust packages. This package type was a little bit more complicated because Cargo needs an additional Git repository to store its package index.

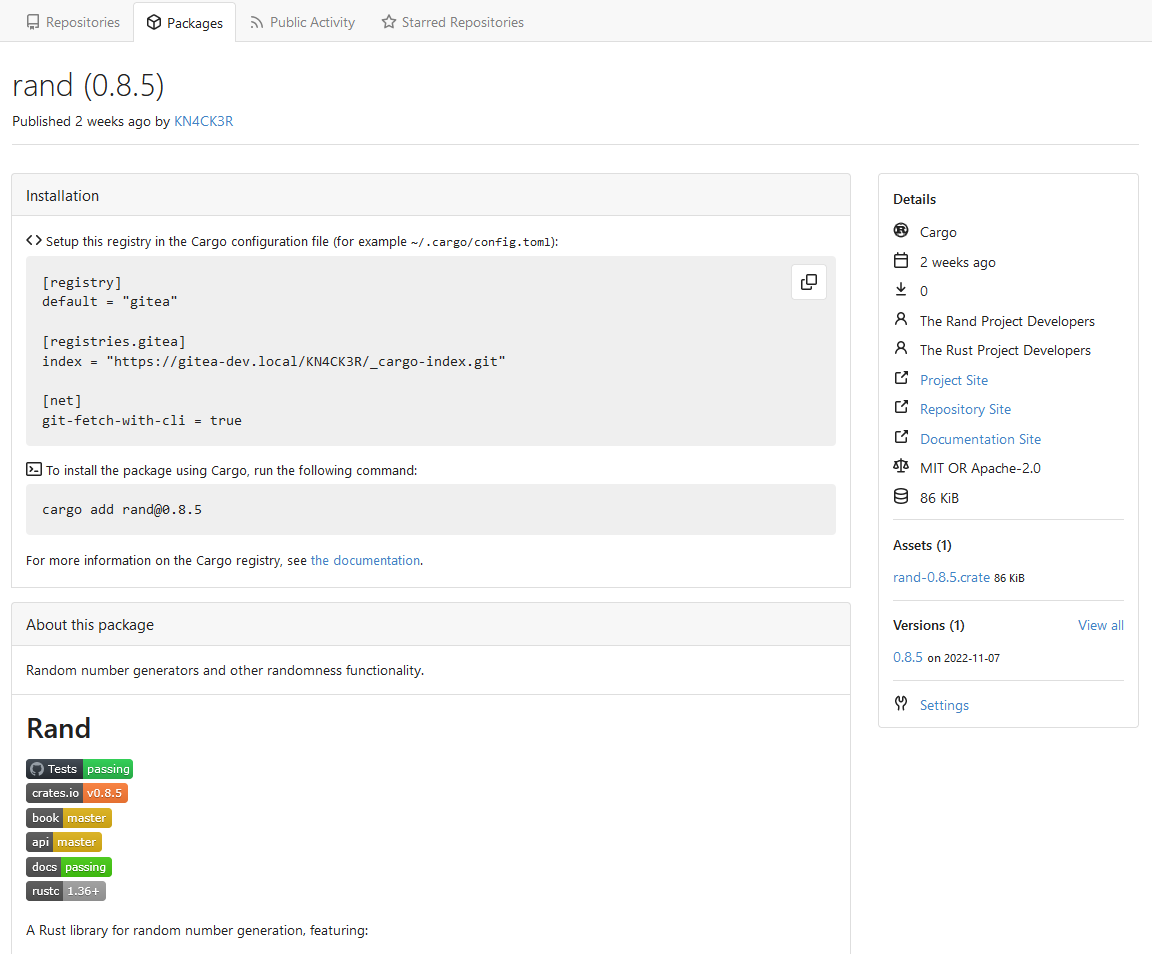

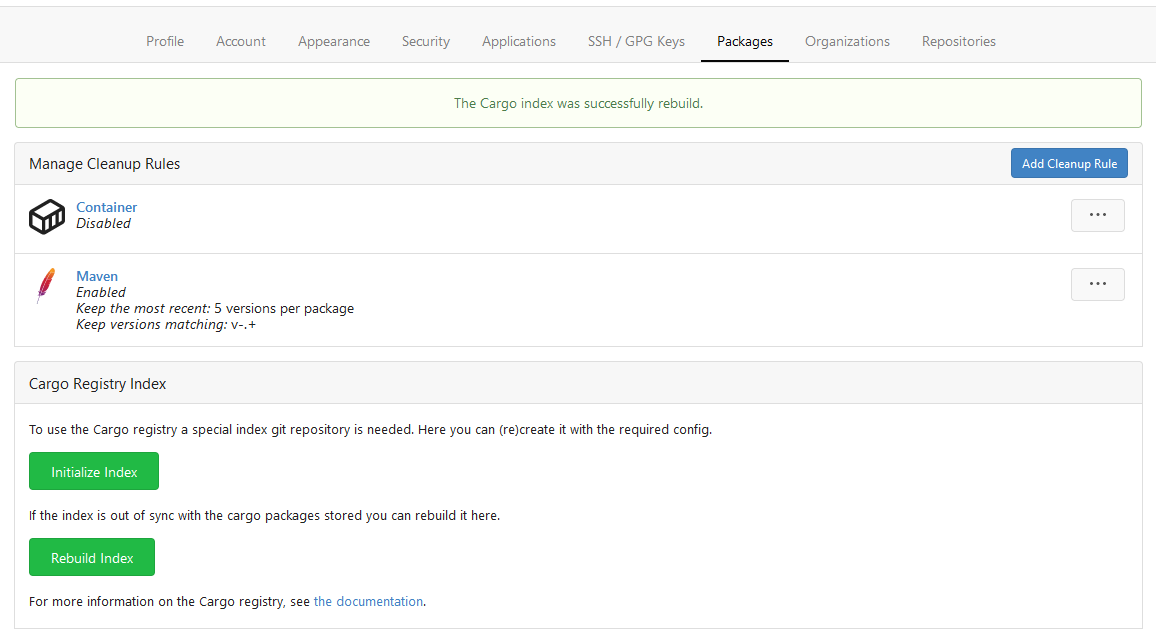

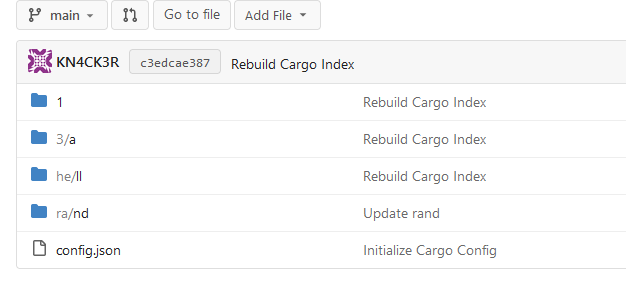

Screenshots:

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>
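
The commit message notes that Cargo keeps its package index in a separate Git repository. As a rough illustration of how crate names map to file paths inside that index, the following standalone sketch mirrors the `BuildPackagePath` function added in `services/packages/cargo/index.go`. The name `indexPath`, the error return (the commit's version panics on an empty name) and the lowercasing step are assumptions made for this example; the commit instead passes the already lowercased package name.

```go
package main

import (
	"errors"
	"fmt"
	"path"
	"strings"
)

// indexPath is a hypothetical helper that maps a crate name to its file path
// in a Cargo registry index, following the same length-based cases as
// BuildPackagePath in the diff.
func indexPath(name string) (string, error) {
	// Assumption: normalize to lower case here; the commit passes LowerName.
	name = strings.ToLower(name)
	switch len(name) {
	case 0:
		return "", errors.New("cargo package name must not be empty")
	case 1:
		return path.Join("1", name), nil
	case 2:
		return path.Join("2", name), nil
	case 3:
		// Three-letter names are grouped by their first character.
		return path.Join("3", name[:1], name), nil
	default:
		// Longer names use the first two and the next two characters as directories.
		return path.Join(name[0:2], name[2:4], name), nil
	}
}

func main() {
	for _, name := range []string{"a", "ab", "abc", "serde"} {
		p, err := indexPath(name)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%s -> %s\n", name, p)
	}
}
```

Returning an error instead of panicking is only a choice for this sketch; it keeps the example easy to call from a test.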

Diffstat (limited to 'services')

| mode | file | lines changed |
|---|---|---|
| -rw-r--r-- | services/cron/tasks_basic.go | 4 |
| -rw-r--r-- | services/forms/package_form.go | 2 |
| -rw-r--r-- | services/packages/cargo/index.go | 290 |
| -rw-r--r-- | services/packages/cleanup/cleanup.go | 154 |
| -rw-r--r-- | services/packages/packages.go | 121 |

5 files changed, 449 insertions, 122 deletions

diff --git a/services/cron/tasks_basic.go b/services/cron/tasks_basic.go
index aad0e3959..2e6560ec0 100644
--- a/services/cron/tasks_basic.go
+++ b/services/cron/tasks_basic.go
@@ -16,7 +16,7 @@ import (
 	"code.gitea.io/gitea/services/auth"
 	"code.gitea.io/gitea/services/migrations"
 	mirror_service "code.gitea.io/gitea/services/mirror"
-	packages_service "code.gitea.io/gitea/services/packages"
+	packages_cleanup_service "code.gitea.io/gitea/services/packages/cleanup"
 	repo_service "code.gitea.io/gitea/services/repository"
 	archiver_service "code.gitea.io/gitea/services/repository/archiver"
 )
@@ -152,7 +152,7 @@ func registerCleanupPackages() {
 		OlderThan: 24 * time.Hour,
 	}, func(ctx context.Context, _ *user_model.User, config Config) error {
 		realConfig := config.(*OlderThanConfig)
-		return packages_service.Cleanup(ctx, realConfig.OlderThan)
+		return packages_cleanup_service.Cleanup(ctx, realConfig.OlderThan)
 	})
 }
 
diff --git a/services/forms/package_form.go b/services/forms/package_form.go
index e78e64ef7..558ed54b6 100644
--- a/services/forms/package_form.go
+++ b/services/forms/package_form.go
@@ -15,7 +15,7 @@ import (
 type PackageCleanupRuleForm struct {
 	ID            int64
 	Enabled       bool
-	Type          string `binding:"Required;In(composer,conan,conda,container,generic,helm,maven,npm,nuget,pub,pypi,rubygems,vagrant)"`
+	Type          string `binding:"Required;In(cargo,composer,conan,conda,container,generic,helm,maven,npm,nuget,pub,pypi,rubygems,vagrant)"`
 	KeepCount     int    `binding:"In(0,1,5,10,25,50,100)"`
 	KeepPattern   string `binding:"RegexPattern"`
 	RemoveDays    int    `binding:"In(0,7,14,30,60,90,180)"`
diff --git a/services/packages/cargo/index.go b/services/packages/cargo/index.go
new file mode 100644
index 000000000..e58a47281
--- /dev/null
+++ b/services/packages/cargo/index.go
@@ -0,0 +1,290 @@
+// Copyright 2022 The Gitea Authors. All rights reserved.
+// SPDX-License-Identifier: MIT
+
+package cargo
+
+import (
+	"bytes"
+	"context"
+	"errors"
+	"fmt"
+	"io"
+	"path"
+	"strconv"
+	"time"
+
+	packages_model "code.gitea.io/gitea/models/packages"
+	repo_model "code.gitea.io/gitea/models/repo"
+	user_model "code.gitea.io/gitea/models/user"
+	"code.gitea.io/gitea/modules/git"
+	"code.gitea.io/gitea/modules/json"
+	cargo_module "code.gitea.io/gitea/modules/packages/cargo"
+	repo_module "code.gitea.io/gitea/modules/repository"
+	"code.gitea.io/gitea/modules/setting"
+	"code.gitea.io/gitea/modules/util"
+	files_service "code.gitea.io/gitea/services/repository/files"
+)
+
+const (
+	IndexRepositoryName = "_cargo-index"
+	ConfigFileName      = "config.json"
+)
+
+// https://doc.rust-lang.org/cargo/reference/registries.html#index-format
+
+func BuildPackagePath(name string) string {
+	switch len(name) {
+	case 0:
+		panic("Cargo package name can not be empty")
+	case 1:
+		return path.Join("1", name)
+	case 2:
+		return path.Join("2", name)
+	case 3:
+		return path.Join("3", string(name[0]), name)
+	default:
+		return path.Join(name[0:2], name[2:4], name)
+	}
+}
+
+func InitializeIndexRepository(ctx context.Context, doer, owner *user_model.User) error {
+	repo, err := getOrCreateIndexRepository(ctx, doer, owner)
+	if err != nil {
+		return err
+	}
+
+	if err := createOrUpdateConfigFile(ctx, repo, doer, owner); err != nil {
+		return fmt.Errorf("createOrUpdateConfigFile: %w", err)
+	}
+
+	return nil
+}
+
+func RebuildIndex(ctx context.Context, doer, owner *user_model.User) error {
+	repo, err := getOrCreateIndexRepository(ctx, doer, owner)
+	if err != nil {
+		return err
+	}
+
+	ps, err := packages_model.GetPackagesByType(ctx, owner.ID, packages_model.TypeCargo)
+	if err != nil {
+		return fmt.Errorf("GetPackagesByType: %w", err)
+	}
+
+	return alterRepositoryContent(
+		ctx,
+		doer,
+		repo,
+		"Rebuild Cargo Index",
+		func(t *files_service.TemporaryUploadRepository) error {
+			// Remove all existing content but the Cargo config
+			files, err := t.LsFiles()
+			if err != nil {
+				return err
+			}
+			for i, file := range files {
+				if file == ConfigFileName {
+					files[i] = files[len(files)-1]
+					files = files[:len(files)-1]
+					break
+				}
+			}
+			if err := t.RemoveFilesFromIndex(files...); err != nil {
+				return err
+			}
+
+			// Add all packages
+			for _, p := range ps {
+				if err := addOrUpdatePackageIndex(ctx, t, p); err != nil {
+					return err
+				}
+			}
+
+			return nil
+		},
+	)
+}
+
+func AddOrUpdatePackageIndex(ctx context.Context, doer, owner *user_model.User, packageID int64) error {
+	repo, err := getOrCreateIndexRepository(ctx, doer, owner)
+	if err != nil {
+		return err
+	}
+
+	p, err := packages_model.GetPackageByID(ctx, packageID)
+	if err != nil {
+		return fmt.Errorf("GetPackageByID[%d]: %w", packageID, err)
+	}
+
+	return alterRepositoryContent(
+		ctx,
+		doer,
+		repo,
+		"Update "+p.Name,
+		func(t *files_service.TemporaryUploadRepository) error {
+			return addOrUpdatePackageIndex(ctx, t, p)
+		},
+	)
+}
+
+type IndexVersionEntry struct {
+	Name         string                     `json:"name"`
+	Version      string                     `json:"vers"`
+	Dependencies []*cargo_module.Dependency `json:"deps"`
+	FileChecksum string                     `json:"cksum"`
+	Features     map[string][]string        `json:"features"`
+	Yanked       bool                       `json:"yanked"`
+	Links        string                     `json:"links,omitempty"`
+}
+
+func addOrUpdatePackageIndex(ctx context.Context, t *files_service.TemporaryUploadRepository, p *packages_model.Package) error {
+	pvs, _, err := packages_model.SearchVersions(ctx, &packages_model.PackageSearchOptions{
+		PackageID: p.ID,
+		Sort:      packages_model.SortVersionAsc,
+	})
+	if err != nil {
+		return fmt.Errorf("SearchVersions[%s]: %w", p.Name, err)
+	}
+	if len(pvs) == 0 {
+		return nil
+	}
+
+	pds, err := packages_model.GetPackageDescriptors(ctx, pvs)
+	if err != nil {
+		return fmt.Errorf("GetPackageDescriptors[%s]: %w", p.Name, err)
+	}
+
+	var b bytes.Buffer
+	for _, pd := range pds {
+		metadata := pd.Metadata.(*cargo_module.Metadata)
+
+		dependencies := metadata.Dependencies
+		if dependencies == nil {
+			dependencies = make([]*cargo_module.Dependency, 0)
+		}
+
+		features := metadata.Features
+		if features == nil {
+			features = make(map[string][]string)
+		}
+
+		yanked, _ := strconv.ParseBool(pd.VersionProperties.GetByName(cargo_module.PropertyYanked))
+		entry, err := json.Marshal(&IndexVersionEntry{
+			Name:         pd.Package.Name,
+			Version:      pd.Version.Version,
+			Dependencies: dependencies,
+			FileChecksum: pd.Files[0].Blob.HashSHA256,
+			Features:     features,
+			Yanked:       yanked,
+			Links:        metadata.Links,
+		})
+		if err != nil {
+			return err
+		}
+
+		b.Write(entry)
+		b.WriteString("\n")
+	}
+
+	return writeObjectToIndex(t, BuildPackagePath(pds[0].Package.LowerName), &b)
+}
+
+func getOrCreateIndexRepository(ctx context.Context, doer, owner *user_model.User) (*repo_model.Repository, error) {
+	repo, err := repo_model.GetRepositoryByOwnerAndName(ctx, owner.Name, IndexRepositoryName)
+	if err != nil {
+		if errors.Is(err, util.ErrNotExist) {
+			repo, err = repo_module.CreateRepository(doer, owner, repo_module.CreateRepoOptions{
+				Name: IndexRepositoryName,
+			})
+			if err != nil {
+				return nil, fmt.Errorf("CreateRepository: %w", err)
+			}
+		} else {
+			return nil, fmt.Errorf("GetRepositoryByOwnerAndName: %w", err)
+		}
+	}
+
+	return repo, nil
+}
+
+type Config struct {
+	DownloadURL string `json:"dl"`
+	APIURL      string `json:"api"`
+}
+
+func createOrUpdateConfigFile(ctx context.Context, repo *repo_model.Repository, doer, owner *user_model.User) error {
+	return alterRepositoryContent(
+		ctx,
+		doer,
+		repo,
+		"Initialize Cargo Config",
+		func(t *files_service.TemporaryUploadRepository) error {
+			var b bytes.Buffer
+			err := json.NewEncoder(&b).Encode(Config{
+				DownloadURL: setting.AppURL + "api/packages/" + owner.Name + "/cargo/api/v1/crates",
+				APIURL:      setting.AppURL + "api/packages/" + owner.Name + "/cargo",
+			})
+			if err != nil {
+				return err
+			}
+
+			return writeObjectToIndex(t, ConfigFileName, &b)
+		},
+	)
+}
+
+// This is a shorter version of CreateOrUpdateRepoFile which allows to perform multiple actions on a git repository
+func alterRepositoryContent(ctx context.Context, doer *user_model.User, repo *repo_model.Repository, commitMessage string, fn func(*files_service.TemporaryUploadRepository) error) error {
+	t, err := files_service.NewTemporaryUploadRepository(ctx, repo)
+	if err != nil {
+		return err
+	}
+	defer t.Close()
+
+	var lastCommitID string
+	if err := t.Clone(repo.DefaultBranch); err != nil {
+		if !git.IsErrBranchNotExist(err) || !repo.IsEmpty {
+			return err
+		}
+		if err := t.Init(); err != nil {
+			return err
+		}
+	} else {
+		if err := t.SetDefaultIndex(); err != nil {
+			return err
+		}
+
+		commit, err := t.GetBranchCommit(repo.DefaultBranch)
+		if err != nil {
+			return err
+		}
+
+		lastCommitID = commit.ID.String()
+	}
+
+	if err := fn(t); err != nil {
+		return err
+	}
+
+	treeHash, err := t.WriteTree()
+	if err != nil {
+		return err
+	}
+
+	now := time.Now()
+	commitHash, err := t.CommitTreeWithDate(lastCommitID, doer, doer, treeHash, commitMessage, false, now, now)
+	if err != nil {
+		return err
+	}
+
+	return t.Push(doer, commitHash, repo.DefaultBranch)
+}
+
+func writeObjectToIndex(t *files_service.TemporaryUploadRepository, path string, r io.Reader) error {
+	hash, err := t.HashObject(r)
+	if err != nil {
+		return err
+	}
+
+	return t.AddObjectToIndex("100644", hash, path)
+}
diff --git a/services/packages/cleanup/cleanup.go b/services/packages/cleanup/cleanup.go
new file mode 100644
index 000000000..2d62a028a
--- /dev/null
+++ b/services/packages/cleanup/cleanup.go
@@ -0,0 +1,154 @@
+// Copyright 2022 The Gitea Authors. All rights reserved.
+// SPDX-License-Identifier: MIT
+
+package container
+
+import (
+	"context"
+	"fmt"
+	"time"
+
+	"code.gitea.io/gitea/models/db"
+	packages_model "code.gitea.io/gitea/models/packages"
+	user_model "code.gitea.io/gitea/models/user"
+	"code.gitea.io/gitea/modules/log"
+	packages_module "code.gitea.io/gitea/modules/packages"
+	"code.gitea.io/gitea/modules/util"
+	packages_service "code.gitea.io/gitea/services/packages"
+	cargo_service "code.gitea.io/gitea/services/packages/cargo"
+	container_service "code.gitea.io/gitea/services/packages/container"
+)
+
+// Cleanup removes expired package data
+func Cleanup(taskCtx context.Context, olderThan time.Duration) error {
+	ctx, committer, err := db.TxContext(taskCtx)
+	if err != nil {
+		return err
+	}
+	defer committer.Close()
+
+	err = packages_model.IterateEnabledCleanupRules(ctx, func(ctx context.Context, pcr *packages_model.PackageCleanupRule) error {
+		select {
+		case <-taskCtx.Done():
+			return db.ErrCancelledf("While processing package cleanup rules")
+		default:
+		}
+
+		if err := pcr.CompiledPattern(); err != nil {
+			return fmt.Errorf("CleanupRule [%d]: CompilePattern failed: %w", pcr.ID, err)
+		}
+
+		olderThan := time.Now().AddDate(0, 0, -pcr.RemoveDays)
+
+		packages, err := packages_model.GetPackagesByType(ctx, pcr.OwnerID, pcr.Type)
+		if err != nil {
+			return fmt.Errorf("CleanupRule [%d]: GetPackagesByType failed: %w", pcr.ID, err)
+		}
+
+		for _, p := range packages {
+			pvs, _, err := packages_model.SearchVersions(ctx, &packages_model.PackageSearchOptions{
+				PackageID:  p.ID,
+				IsInternal: util.OptionalBoolFalse,
+				Sort:       packages_model.SortCreatedDesc,
+				Paginator:  db.NewAbsoluteListOptions(pcr.KeepCount, 200),
+			})
+			if err != nil {
+				return fmt.Errorf("CleanupRule [%d]: SearchVersions failed: %w", pcr.ID, err)
+			}
+			versionDeleted := false
+			for _, pv := range pvs {
+				if pcr.Type == packages_model.TypeContainer {
+					if skip, err := container_service.ShouldBeSkipped(ctx, pcr, p, pv); err != nil {
+						return fmt.Errorf("CleanupRule [%d]: container.ShouldBeSkipped failed: %w", pcr.ID, err)
+					} else if skip {
+						log.Debug("Rule[%d]: keep '%s/%s' (container)", pcr.ID, p.Name, pv.Version)
+						continue
+					}
+				}
+
+				toMatch := pv.LowerVersion
+				if pcr.MatchFullName {
+					toMatch = p.LowerName + "/" + pv.LowerVersion
+				}
+
+				if pcr.KeepPatternMatcher != nil && pcr.KeepPatternMatcher.MatchString(toMatch) {
+					log.Debug("Rule[%d]: keep '%s/%s' (keep pattern)", pcr.ID, p.Name, pv.Version)
+					continue
+				}
+				if pv.CreatedUnix.AsLocalTime().After(olderThan) {
+					log.Debug("Rule[%d]: keep '%s/%s' (remove days)", pcr.ID, p.Name, pv.Version)
+					continue
+				}
+				if pcr.RemovePatternMatcher != nil && !pcr.RemovePatternMatcher.MatchString(toMatch) {
+					log.Debug("Rule[%d]: keep '%s/%s' (remove pattern)", pcr.ID, p.Name, pv.Version)
+					continue
+				}
+
+				log.Debug("Rule[%d]: remove '%s/%s'", pcr.ID, p.Name, pv.Version)
+
+				if err := packages_service.DeletePackageVersionAndReferences(ctx, pv); err != nil {
+					return fmt.Errorf("CleanupRule [%d]: DeletePackageVersionAndReferences failed: %w", pcr.ID, err)
+				}
+
+				versionDeleted = true
+			}
+
+			if versionDeleted {
+				if pcr.Type == packages_model.TypeCargo {
+					owner, err := user_model.GetUserByID(ctx, pcr.OwnerID)
+					if err != nil {
+						return fmt.Errorf("GetUserByID failed: %w", err)
+					}
+					if err := cargo_service.AddOrUpdatePackageIndex(ctx, owner, owner, p.ID); err != nil {
+						return fmt.Errorf("CleanupRule [%d]: cargo.AddOrUpdatePackageIndex failed: %w", pcr.ID, err)
+					}
+				}
+			}
+		}
+		return nil
+	})
+	if err != nil {
+		return err
+	}
+
+	if err := container_service.Cleanup(ctx, olderThan); err != nil {
+		return err
+	}
+
+	ps, err := packages_model.FindUnreferencedPackages(ctx)
+	if err != nil {
+		return err
+	}
+	for _, p := range ps {
+		if err := packages_model.DeleteAllProperties(ctx, packages_model.PropertyTypePackage, p.ID); err != nil {
+			return err
+		}
+		if err := packages_model.DeletePackageByID(ctx, p.ID); err != nil {
+			return err
+		}
+	}
+
+	pbs, err := packages_model.FindExpiredUnreferencedBlobs(ctx, olderThan)
+	if err != nil {
+		return err
+	}
+
+	for _, pb := range pbs {
+		if err := packages_model.DeleteBlobByID(ctx, pb.ID); err != nil {
+			return err
+		}
+	}
+
+	if err := committer.Commit(); err != nil {
+		return err
+	}
+
+	contentStore := packages_module.NewContentStore()
+	for _, pb := range pbs {
+		if err := contentStore.Delete(packages_module.BlobHash256Key(pb.HashSHA256)); err != nil {
+			log.Error("Error deleting package blob [%v]: %v", pb.ID, err)
+		}
+	}
+
+	return nil
+}
diff --git a/services/packages/packages.go b/services/packages/packages.go
index 9e52cb145..f50284075 100644
--- a/services/packages/packages.go
+++ b/services/packages/packages.go
@@ -10,7 +10,6 @@ import (
 	"fmt"
 	"io"
 	"strings"
-	"time"
 
 	"code.gitea.io/gitea/models/db"
 	packages_model "code.gitea.io/gitea/models/packages"
@@ -22,7 +21,6 @@ import (
 	packages_module "code.gitea.io/gitea/modules/packages"
 	"code.gitea.io/gitea/modules/setting"
 	"code.gitea.io/gitea/modules/util"
-	container_service "code.gitea.io/gitea/services/packages/container"
 )
 
 var (
@@ -335,6 +333,8 @@ func CheckSizeQuotaExceeded(ctx context.Context, doer, owner *user_model.User, p
 
 	var typeSpecificSize int64
 	switch packageType {
+	case packages_model.TypeCargo:
+		typeSpecificSize = setting.Packages.LimitSizeCargo
 	case packages_model.TypeComposer:
 		typeSpecificSize = setting.Packages.LimitSizeComposer
 	case packages_model.TypeConan:
@@ -448,123 +448,6 @@ func DeletePackageFile(ctx context.Context, pf *packages_model.PackageFile) erro
 	return packages_model.DeleteFileByID(ctx, pf.ID)
 }
 
-// Cleanup removes expired package data
-func Cleanup(taskCtx context.Context, olderThan time.Duration) error {
-	ctx, committer, err := db.TxContext(taskCtx)
-	if err != nil {
-		return err
-	}
-	defer committer.Close()
-
-	err = packages_model.IterateEnabledCleanupRules(ctx, func(ctx context.Context, pcr *packages_model.PackageCleanupRule) error {
-		select {
-		case <-taskCtx.Done():
-			return db.ErrCancelledf("While processing package cleanup rules")
-		default:
-		}
-
-		if err := pcr.CompiledPattern(); err != nil {
-			return fmt.Errorf("CleanupRule [%d]: CompilePattern failed: %w", pcr.ID, err)
-		}
-
-		olderThan := time.Now().AddDate(0, 0, -pcr.RemoveDays)
-
-		packages, err := packages_model.GetPackagesByType(ctx, pcr.OwnerID, pcr.Type)
-		if err != nil {
-			return fmt.Errorf("CleanupRule [%d]: GetPackagesByType failed: %w", pcr.ID, err)
-		}
-
-		for _, p := range packages {
-			pvs, _, err := packages_model.SearchVersions(ctx, &packages_model.PackageSearchOptions{
-				PackageID:  p.ID,
-				IsInternal: util.OptionalBoolFalse,
-				Sort:       packages_model.SortCreatedDesc,
-				Paginator:  db.NewAbsoluteListOptions(pcr.KeepCount, 200),
-			})
-			if err != nil {
-				return fmt.Errorf("CleanupRule [%d]: SearchVersions failed: %w", pcr.ID, err)
-			}
-			for _, pv := range pvs {
-				if skip, err := container_service.ShouldBeSkipped(ctx, pcr, p, pv); err != nil {
-					return fmt.Errorf("CleanupRule [%d]: container.ShouldBeSkipped failed: %w", pcr.ID, err)
-				} else if skip {
-					log.Debug("Rule[%d]: keep '%s/%s' (container)", pcr.ID, p.Name, pv.Version)
-					continue
-				}
-
-				toMatch := pv.LowerVersion
-				if pcr.MatchFullName {
-					toMatch = p.LowerName + "/" + pv.LowerVersion
-				}
-
-				if pcr.KeepPatternMatcher != nil && pcr.KeepPatternMatcher.MatchString(toMatch) {
-					log.Debug("Rule[%d]: keep '%s/%s' (keep pattern)", pcr.ID, p.Name, pv.Version)
-					continue
-				}
-				if pv.CreatedUnix.AsLocalTime().After(olderThan) {
-					log.Debug("Rule[%d]: keep '%s/%s' (remove days)", pcr.ID, p.Name, pv.Version)
-					continue
-				}
-				if pcr.RemovePatternMatcher != nil && !pcr.RemovePatternMatcher.MatchString(toMatch) {
-					log.Debug("Rule[%d]: keep '%s/%s' (remove pattern)", pcr.ID, p.Name, pv.Version)
-					continue
-				}
-
-				log.Debug("Rule[%d]: remove '%s/%s'", pcr.ID, p.Name, pv.Version)
-
-				if err := DeletePackageVersionAndReferences(ctx, pv); err != nil {
-					return fmt.Errorf("CleanupRule [%d]: DeletePackageVersionAndReferences failed: %w", pcr.ID, err)
-				}
-			}
-		}
-		return nil
-	})
-	if err != nil {
-		return err
-	}
-
-	if err := container_service.Cleanup(ctx, olderThan); err != nil {
-		return err
-	}
-
-	ps, err := packages_model.FindUnreferencedPackages(ctx)
-	if err != nil {
-		return err
-	}
-	for _, p := range ps {
-		if err := packages_model.DeleteAllProperties(ctx, packages_model.PropertyTypePackage, p.ID); err != nil {
-			return err
-		}
-		if err := packages_model.DeletePackageByID(ctx, p.ID); err != nil {
-			return err
-		}
-	}
-
-	pbs, err := packages_model.FindExpiredUnreferencedBlobs(ctx, olderThan)
-	if err != nil {
-		return err
-	}
-
-	for _, pb := range pbs {
-		if err := packages_model.DeleteBlobByID(ctx, pb.ID); err != nil {
-			return err
-		}
-	}
-
-	if err := committer.Commit(); err != nil {
-		return err
-	}
-
-	contentStore := packages_module.NewContentStore()
-	for _, pb := range pbs {
-		if err := contentStore.Delete(packages_module.BlobHash256Key(pb.HashSHA256)); err != nil {
-			log.Error("Error deleting package blob [%v]: %v", pb.ID, err)
-		}
-	}
-
-	return nil
-}
-
 // GetFileStreamByPackageNameAndVersion returns the content of the specific package file
 func GetFileStreamByPackageNameAndVersion(ctx context.Context, pvi *PackageInfo, pfi *PackageFileInfo) (io.ReadSeekCloser, *packages_model.PackageFile, error) {
 	log.Trace("Getting package file stream: %v, %v, %s, %s, %s, %s", pvi.Owner.ID, pvi.PackageType, pvi.Name, pvi.Version, pfi.Filename, pfi.CompositeKey)